State-Level AI Legislation: An Evolving Landscape

The rapid development of artificial intelligence has ushered in a new era of legal scrutiny. As states across the nation forge ahead in crafting AI legislation, a mix of innovative ideas and unresolved problems is emerging from legislative halls. In 2025, lawmakers took on the challenge of regulating AI, focusing on protecting citizens from potential overreach while trying to harness the technology’s promise. This op-ed takes a closer look at the state of AI legislation, highlighting the key areas of focus, the sticking points in proposed bills, and the ways state governments are trying to govern a transformative but unsettling technology.

Understanding the Current Environment of AI Legislation at the State Level

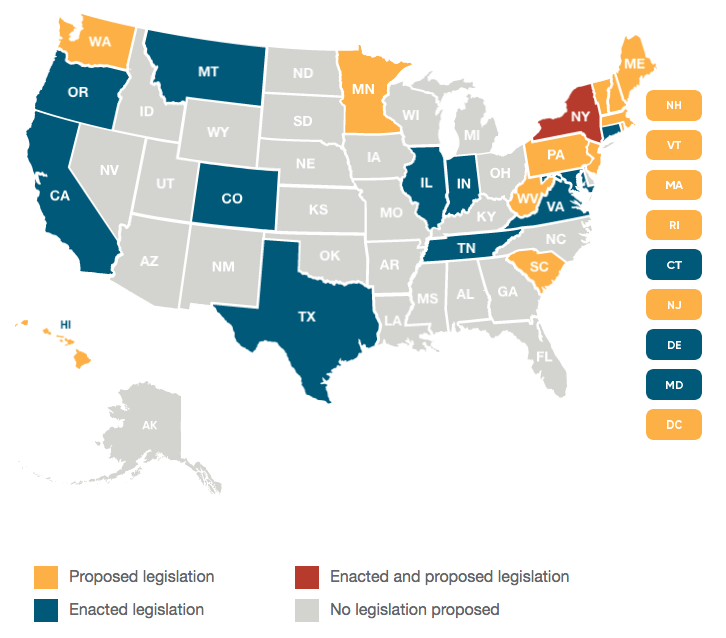

Across the United States, 34 states are actively studying AI, with numerous committees and task forces dedicated to working out the legal details. In 2025 alone, over 260 measures related to AI were introduced. Amid this legislative activity, many states are addressing concerns about the misuse of AI, from deepfakes and nonconsensual intimate imagery to data privacy. Many of these bills aim to protect citizens, a goal with bipartisan support, though the approaches vary and often raise regulatory problems of their own.

At the heart of these efforts is a balancing act: lawmakers must find a path that encourages innovation while ensuring that AI deployment does not lead to unintended harm. In many instances, the measures propose restrictions and disclosures aimed at safeguarding personal rights. With state governments pushing forward, federal actions and proposals – including a recent suggestion for a 10-year moratorium on state-level AI regulation – add an extra layer of complication that everyone is watching with both interest and concern.

Nonconsensual Intimate Imagery, Deepfakes, and Child Sexual Abuse Material: The Tricky Parts of Protecting Citizens

One of the most sensitive areas of current AI legislation is nonconsensual intimate imagery (NCII) and child sexual abuse material (CSAM). States such as Maryland, Mississippi, and New Mexico have taken steps to establish strict rules that require online platforms to develop processes for removing synthetic NCII or deepfakes intended to harm individuals. These proposals are a reaction to the alarming spread of manipulated media and the threat it poses to personal privacy and dignity.

When we dig into these proposals, we see that many bills introduce significant penalties for those disseminating nonconsensual images. However, the legislative path has been bumpy, with many NCII/CSAM bills dying in committee due to disagreements over the scope and enforcement mechanisms. A glance at the pros and cons of these proposals reveals:

- Pros: Enhanced protection for vulnerable individuals, a clear mandate for online platforms to act quickly, and a deterrent against synthetic identity abuse.

- Cons: Enforcement challenges, potential conflicts with freedom of expression, and the risk of overbroad definitions that could stifle legitimate content.

These debates underscore that while protection is critical, the specifics that determine how such laws work in practice are hard to get right. Legislators need to resolve these questions to produce measures that are enforceable and fair, without infringing on other constitutional rights.

Elections and AI: Voting Integrity in the Age of Deepfakes

The use of AI in elections is another area where state lawmakers are working hard to maintain the integrity of democratic processes. Many proposed bills target the potential for deepfake videos and AI-generated content to influence voters. Given the high stakes, these bills require political advertisements that use synthetic content to include clear disclosures. A New York proposal, for example, requires any political communication that features AI-generated content to clearly state that it is synthetic, thereby protecting voters from misinformation.

Additionally, some states have tackled the issue of using AI to harm political candidates. A Massachusetts bill aims to prohibit the deliberate creation or distribution of deepfake videos aimed at discrediting a rival in the run-up to an election. Although many of these bills remain in committee and may never become law, their introduction reflects an essential public concern: ensuring that artificial intelligence does not play a disruptive role in democratic processes.

A summary of key election-related proposals includes:

- Disclosure Requirements: Mandating that any political content created with AI must disclose its synthetic nature.

- Anti-Deception Measures: Prohibiting the deliberate use of deepfakes to damage the reputation of political figures.

- Timing Restrictions: Stipulating that such measures must be in effect well before elections to preserve their integrity.

These approaches are part of a broader effort by states to manage the risks of AI in the political arena, ensuring that citizens receive transparent and accurate information during the election cycle.

Transparency Measures in Generative AI: Digging Into the Fine Points of Disclosure

Generative AI systems, which can produce human-like text and imagery, are increasingly at the forefront of state legislative debates. Lawmakers are particularly concerned with ensuring that consumers are aware when they are interacting with chatbots or AI systems capable of mimicking human behavior. Hawaii and Massachusetts have introduced proposed legislation that focuses on these fine points, requiring clear, conspicuous notifications to inform users of AI involvement during commercial transactions.

For instance, Hawaii's proposal mandates that companies must alert consumers if a chatbot or any similar AI is handling communications. Moreover, these bills often require companies to establish safeguards, such as red teams tasked with testing the robustness of any watermarks applied to AI-generated content. These measures are designed to combat the risk that such watermarks may be easily removed, which could otherwise lead to deceptive practices.

The benefits and challenges associated with these transparency measures include:

- Consumer Awareness: Ensuring that individuals are not misled by AI without knowing it, which builds trust in commercial interactions.

- Accountability: Requiring companies to monitor their own systems and adjust policies as misuse is discovered, which is essential for long-term regulation.

- Enforcement Issues: Determining how to effectively penalize companies that fail to provide adequate notice can be confusing and might result in further legal disputes.

By pushing for transparency, legislators are taking a proactive approach, aiming to make the role of AI in everyday transactions visible. Such steps are pivotal in preventing unsuspecting consumers from being misled in an era of synthetic interactions.

High-Risk AI and Automated Decision-Making: Managing Your Way Through the Complicated Pieces

One of the most critical topics addressed by state legislation is the regulation of automated decision-making technology (ADMT) and high-risk AI systems. These tools, which are increasingly used in sectors ranging from finance to public safety, carry the potential for both beneficial innovation and unintended harms. A number of states have taken cues from Colorado’s AI Act, which imposes strict rules for algorithmic transparency, accountability, and non-discrimination. The Colorado model serves as a touchstone for other states such as Georgia, Illinois, Iowa, and Maryland.

Many of these proposals include requirements such as:

- Ensuring that AI is identified as a significant factor in consequential decisions.

- Mandating that entities implement transparency and accountability protocols.

- Providing channels for individuals to ask for an explanation of decisions that impact them.

While these measures represent a key step toward protecting citizens, they also raise difficulties of their own. There is a risk that overly complex regulations could stifle innovation, while overly lenient rules might leave gaps where harmful practices can persist. Policymakers therefore face the challenge of striking the right balance between protection and progress.

A table summarizing some of the high-risk AI proposals is provided below:

| State | Main Focus | Status |
|---|---|---|
| Colorado | Algorithmic transparency and accountability in high-stakes decisions | Enacted |
| Georgia | Modeled after Colorado, with a focus on limited government use | Died in committee |
| Illinois | Broad accountability measures and consumer rights | In committee |
| Maryland | High-risk AI in consequential decisions | Died in committee |

This table illustrates the differing fates of similar proposals across states and highlights the variety of approaches being tried—some more comprehensive than others. The ongoing debate underscores the essential need for laws that are robust enough to provide protections without inadvertently causing innovation to stall.

Government Use of AI: Finding Your Path Through Public Accountability

The use of AI by government entities themselves has come under increased scrutiny. As government agencies experiment with AI for functions ranging from tax collection to public service delivery, lawmakers have introduced proposals to ensure that the public is always in the loop. In states like Georgia, Montana, and Nevada, legislation is being crafted to establish oversight boards, require human review of decisions, and mandate clear disclosures when AI is used by state or local governments.

A closer look at these initiatives shows two distinct streams of thought:

- Proactive Governance: Laws that require the creation of oversight bodies to monitor AI deployment, ensuring that government decisions remain transparent and accountable.

- Protective Measures: Bills that focus on curbing excessive reliance on AI in decision-making, by requiring human oversight on critical decisions and clear communication to citizens.

For example, a Nevada bill proposes that the Department of Taxation must notify taxpayers when AI systems are involved in their interactions. Meanwhile, Montana’s recently signed legislation limits AI use by state and local government, requiring that AI-driven recommendations receive human review. These measures are seen as key steps in preventing opaque automated government decisions from harming citizens’ trust in public institutions.

It is essential that state legislators maintain oversight of government-led AI initiatives. Without such checks, there is a real danger that widespread reliance on AI could erode public trust, leaving citizens with only a vague understanding of how critical decisions are made.

Employment and Workplace Surveillance: Protecting Workers from Overreaching Technology

Employment is another area where AI legislation shows mixed approaches. Several states have introduced bills designed to ensure that the use of AI in hiring and workplace surveillance does not infringe on workers’ rights. These measures are meant to address the worrying possibility that AI could be used to unfairly filter or monitor applicants and employees.

For instance, proposals in Illinois and Pennsylvania require that employers notify job candidates when AI is used in decision-making processes during interviews. Likewise, laws in California have put limitations on the use of AI for workplace surveillance. The goal here is clear: to prevent algorithmic decisions from putting employees at a disadvantage without their knowledge.

Some of the key points in these employment-related bills include:

- Transparency: Employers must disclose when AI is used in hiring or performance assessments.

- Data Protection: New regulations aim to secure personal information from misuse in AI systems.

- Worker Consent: Legislation often emphasizes that workers should be informed and give their consent to any AI-driven monitoring or decision-making process.

As companies look to integrate AI-driven technologies in the workplace, the legislative landscape continues to evolve. Employers and employees alike must understand and adapt to these measures to ensure that innovation does not come at the expense of fairness or privacy in the workplace.

Healthcare and AI: Tackling the Overwhelming Challenges in Treatment Decisions

Healthcare represents one of the most critical yet controversial areas for AI deployment. With treatment and coverage decisions increasingly influenced by AI systems, states are stepping in to set clear guidelines. California, Illinois, and Indiana have all introduced legislative proposals aimed at ensuring that AI never substitutes for human judgment in medical decisions.

In California, lawmakers have proposed bills that explicitly bar the use of specific language suggesting that AI systems are licensed or certified to practice healthcare. Meanwhile, Illinois and Indiana have debated measures that would either ban the use of AI for making therapeutic decisions or require full disclosure when AI is involved in patient care decisions. The primary concern is that reliance on AI could lead to outcomes that lack the nuance of human expertise, resulting in potentially dangerous or inappropriate treatment choices.

Highlights from the healthcare-related proposals include:

- Prohibition of AI in Therapeutic Decisions: Certain bills propose that licensed health care professionals are not allowed to rely solely on AI when making treatment recommendations.

- Mandatory Disclosures: Health care providers must inform patients of any AI involvement in their diagnosis or treatment planning.

- Patient Protections: Proposed rules would ensure that AI does not replace personal interactions between patients and professionals, safeguarding the human element in care.

These proposals are a response to the overwhelming challenges presented by integrating AI into an industry where even small errors can have huge, life-altering impacts. Lawmakers are taking a cautious approach, keenly aware of the potential for AI to both improve healthcare and introduce new risks. By addressing these issues head-on, states demonstrate their commitment to protecting patient well-being in an era of rapid technological change.

Federal vs. State Jurisdiction: The Ongoing Battle Over AI Regulation

While state governments push forward with a myriad of AI-related bills, apprehensions about federal overreach continue to simmer in legislative corridors. Recently, Republican lawmakers in the U.S. Senate floated a proposal to institute a 10-year moratorium on state-driven AI regulation. Although it was not ultimately enacted, the move signals the tension between state innovation and federal control. This federal-state tug-of-war underscores the urgent jurisdictional questions that remain unresolved.

Key issues at the federal level include:

- Monitoring State Legislation: With the federal AI Action Plan directing bodies like the FCC to keep a close eye on state laws, there are concerns that federal intervention may dilute state-level protections.

- Balancing Power: States, often more nimble on local issues, have the advantage of tailoring laws to address community needs. However, excessive federal oversight could blur these efforts, leading to a one-size-fits-all approach that may not work uniformly across diverse jurisdictions.

- Future Directions: The battle is just beginning. Uncertainty about the federal government's final stance means that state legislators need to remain vigilant and proactive in crafting laws that can stand the test of national scrutiny.

The interplay between federal ambitions and state initiatives represents a significant challenge. Lawmakers on both levels must work together—or at least find ways to coexist—in order to ensure that the regulatory framework for AI is both robust and adaptable, ultimately benefiting society at large.

A Comparative Look: Piecemeal vs. Comprehensive Approaches to AI Regulation

Not all states are embracing a single framework for governing AI. While some, like Colorado, have pursued an all-encompassing approach with measures addressing algorithmic discrimination, consumer rights, and AI accountability, others prefer a more targeted or piecemeal strategy. California, for example, started with an ambitious comprehensive bill that was later vetoed, prompting a shift toward a patchwork of narrower laws that address issues like election deepfakes, digital replicas of performers, and training-data disclosures.

Let’s break down the contrasting strategies:

- Comprehensive Legislation:
  - Pros: Provides a unified framework, reduces gaps between related issues, and sets clear expectations for AI developers and users.
  - Cons: May be too rigid, with little room for adjustment as the technology evolves, and can be intimidating for industries still in the early stages of AI integration.

- Piecemeal Legislation:
  - Pros: Offers flexibility, allowing states to address specific issues as they arise, and can be less overwhelming for emerging technologies.
  - Cons: Risks creating a patchwork of inconsistent laws that may be hard to navigate, with fine shades of regulatory differences from state to state.

Each method has its merits and drawbacks. The piecemeal strategy lets lawmakers focus on isolated, pressing concerns—such as deepfakes in elections or AI-based consumer fraud—without getting bogged down by every subtle part of AI’s potential risks. Conversely, comprehensive regulation sets a benchmark for uniformity and broad-based accountability, though it may struggle to cope with the fast pace of technological change.

Charting the Future Path: Opportunities and Obstacles Ahead

The wave of state-driven AI legislation marks a significant turning point in how American society addresses technological innovation. As ballots and committee rooms become the frontline for AI policy, the following observations emerge regarding the future direction of these efforts:

- Ongoing Innovation vs. Citizen Protection: Lawmakers are compelled to find a reasonable balance between fostering innovation and shielding citizens from potentially harmful practices. Striking this balance is not easy, as every proposed law must contend with unintended consequences and enforcement challenges.

- Interstate Variability: With legislation evolving at different rates across states, businesses and consumers alike face a landscape that is both varied and full of subtle differences. Companies must track these varying standards to remain compliant while continuing to innovate.

- Federal Influence and Coordination: The relationship between state efforts and potential federal oversight remains tense. As the FCC and other federal bodies monitor state laws, future coordination, or even conflict, could reshape the regulatory environment entirely.

- Public Engagement: Voter and consumer reactions play a critical role in shaping final outcomes. Continuous public input and expert commentary will be necessary to refine these laws so that they truly serve the common good.

Looking ahead, states are likely to continue acting as testing grounds for AI regulation. The lessons learned from these diverse approaches will eventually inform a more coherent and nationally consistent framework. As policymakers dig into each issue—from consumer transparency to workplace surveillance—the ultimate goal is to craft legislation that not only protects citizens but also supports the ethical evolution of technology.

Challenges in Enforcement and the Role of Judicial Oversight

No legislative framework is complete without addressing the final and often hardest step: enforcement. As state laws on AI become more detailed and far-reaching, there is an inherent risk that the enforcement mechanisms may lag behind. Courts will need to interpret new regulations, and judicial oversight will be essential in resolving disputes over how the laws are applied.

Challenges in enforcement include:

- Establishing clear guidelines for what constitutes non-compliance, especially when AI presents a range of unpredictable behaviors.

- Ensuring that penalties are proportionate to the offense, without stifling innovation in AI research and application.

- Managing resource limitations for oversight bodies that may struggle to keep up with rapidly advancing technologies.

Judicial oversight will be called upon to manage cases where regulatory intent conflicts with industry practice. As judges work through these cases, they will need to pay close attention to the specific provisions of each law, ensuring that both citizens and innovators receive fair treatment under the new AI regulatory regime.

The Impact on Industry: Preparing for a Regulated AI Future

Businesses that employ AI technology now face a regulatory environment that is both evolving and unpredictable. For companies operating in multiple states, the challenges of complying with a patchwork of laws cannot be overstated. From technology developers to financial institutions integrating AI in risk assessments, every sector must prepare to meet this maze of requirements.

Key strategies for industry adaptation include:

- Investing in Compliance Infrastructure: Developing robust internal measures to ensure that AI systems meet new disclosure and operational standards.

- Fostering Industry Partnerships: Collaborating with peers and regulatory bodies to create best practices that can ease the adoption of AI regulations.

- Staying Informed: Constant monitoring of state and federal legislative changes to quickly adapt policies and technologies in response to new requirements.

- Engaging with Policymakers: Actively contributing to discussions on AI regulation to ensure that business perspectives are considered alongside citizen protections.

Facing challenges that are both substantial and unpredictable, industries must remain agile. Over time, as legal precedents are established and best practices emerge, the landscape of AI regulation is likely to become less confusing and more navigable for all parties involved.

Comparative International Perspectives on AI Regulation

While state lawmakers in the United States work through domestic challenges, many other nations are also actively legislating on AI. From technology-focused initiatives in China to more citizen-centric approaches in Switzerland, the global map of AI regulation reflects a diverse set of priorities. Internationally, AI plans vary significantly: some are highly focused on defense or security, while others prioritize broad societal betterment and data privacy. These differences offer learning opportunities for U.S. states as well as an indication that no single model fits all scenarios.

Three international examples illustrate these differences:

- China: Emphasizes the competitive advantage of AI in defense and economic growth, integrating strict state control measures.

- Switzerland: Focuses on using AI for societal improvement, advancing policies that protect individual privacy and promote ethical standards.

- India: Balances rapid technological growth with attention to human talent development and digital inclusion, often inspiring consortium models in international partnerships.

These international perspectives offer useful insights into handling the details and difficult trade-offs of AI regulation. U.S. states may take a page from these global playbooks, adopting measures that promote ethical AI development without overly stifling the potential benefits of the technology.

Lessons Learned and the Road Ahead

Reflecting on the legislative initiatives of 2025, one clear thread runs through all the debates: a deep commitment by state lawmakers to protect citizens from potential AI overreach. Whether it is the battle against synthetic nonconsensual imagery, the fight to ensure election integrity, or the safeguarding of workplace and healthcare decisions, each proposal is an attempt to come to terms with the complexities of an emerging technology.

Key takeaways from this period of intense legislative activity include:

- Citizen Protection Remains Central: Despite differences in approach, the primary focus is safeguarding the public from the unintended consequences of AI.

- Regulatory Diversity is Both a Strength and a Challenge: The state-by-state approach allows for localized experimentation, yet it also creates an uneven landscape that could prove confusing for a national market.

- Innovation Demands Flexibility: Policymakers must ensure that laws do not become overly burdensome or rigid, which might slow down beneficial technological advancements.

- Collaboration is Crucial: Ongoing dialogue between states, industry, and federal government will play a key role in shaping smart, effective regulation.

It is essential for regulators, legislators, and industry leaders to continue these discussions, regularly re-evaluating laws as AI technology evolves. Achieving a balance that is both protective and growth-oriented is not an easy feat, and the complexity of AI remains a constant challenge.

Concluding Thoughts: Steering Through a Complex Yet Promising Future

As we watch the evolution of AI legislation at the state level, it is clear that the challenges ahead are substantial and marked by tension. The experiences of 2025 underscore the need for lawmakers to work through the hard questions of how AI is used in various sectors, from nonconsensual imagery and elections to government use, employment, and health care.

There is no single solution to the challenges presented by artificial intelligence, but one thing is evident: states are determined to act in the interest of their citizens. In doing so, they are not only safeguarding privacy and human rights but also shaping a regulatory environment that may ultimately position the United States as a model for ethical AI development.

Whether you are an industry leader, a policymaker, or simply a citizen watching these changes unfold, the future of AI regulation is one where continuous dialogue, adaptable policies, and a commitment to transparency will be key. As we look ahead, the goal remains to reduce uncertainty and move through this rapidly evolving landscape with care, collaboration, and a commitment to making informed, balanced decisions.

In the coming years, the interplay between state innovation and federal oversight will be closely watched. The outcomes of these legislative experiments will not only determine the pace of AI adoption but will also set a precedent for how societies can embrace technology while preserving fundamental human rights and ensuring accountability across all domains.

As states continue to develop their AI laws, we can expect a continued evolution of strategies, from comprehensive frameworks to narrow, sector-specific bills. The relationship between federal and state regulation may well remain tense, but it is clear that proactive legislation at the state level forms the backbone of any long-term solution to the challenges associated with artificial intelligence.

Ultimately, the objective is to forge a path through this maze of policies that is as clear and accessible as possible, one that both protects individuals and fosters innovation. For those invested in the future of AI, understanding these developments is not just an academic exercise; it is a critical part of ensuring that technology serves humanity’s best interests.

The road ahead is undoubtedly full of surprises and difficult regulatory challenges. Yet, with thoughtful policymaking and a willingness to engage with the fine points of each proposal, we are witnessing the early stages of what could become one of the most transformative governance efforts of our time.

In closing, as we digest the legislative activity of 2025, let us remain mindful that the aim is to cultivate an environment where AI can help improve our lives, provided that it is harnessed responsibly and ethically. The ongoing dialogue among lawmakers, industry leaders, and the public will be essential in achieving this delicate balance. Only through a collaborative and nuanced approach can we ensure that artificial intelligence remains a tool for progress rather than a source of new, daunting challenges.

Originally posted at https://www.brookings.edu/articles/how-different-states-are-approaching-ai/

