Understanding the “Too Many Requests” Phenomenon in Web Law

The error message “Too Many Requests” (HTTP status code 429) might seem like a simple technical snag, but it opens the door to a broader discussion about the legal and regulatory challenges of the digital age. As websites and online platforms increasingly contend with heavy traffic and automated interactions, the legal community must take a closer look at how this seemingly routine message reflects deeper questions of responsibility, fairness, and the balance between innovation and control.

This editorial explores the subject from several angles. We will examine legal views on automated data collection, dissect the responsibilities of website operators, and weigh the impact on individual users and companies alike. Throughout, we rely on plain language and relatable examples to illuminate the more difficult aspects of our rapidly evolving digital world.

Examining the Legal Implications of Automated Requests

At its core, the “Too Many Requests” error indicates that a client has sent more requests in a given period than the server is willing to handle, often because automated systems or bots are generating a barrage of traffic. This raises several legal considerations. First and foremost is the question of whether automated activity should be regulated at all. Beyond that, the subject touches on intellectual property, unauthorized access, and even potential breaches of contract.

Many websites have terms of service that explicitly restrict the use of automated systems to gather or manipulate data. When a user or a bot exceeds the permitted volume of requests, the website may respond with a “Too Many Requests” error as a form of self-regulation. This may sound like a purely technical response, but it underscores legal principles of consent and breach of contract. Users who knowingly disregard such terms, which are often presented in click-wrap or browse-wrap agreements, could be held legally accountable.

Furthermore, where excessive automated requests cause harm, such as server slowdowns or disrupted service for legitimate users, providers might seek legal remedies. The potential damages include financial loss, reputational harm, and the interruption of critical services. The legal landscape here is unsettled, as courts must balance the rights of data collectors against the operational needs of websites.

Beyond contractual issues, the use of bots to circumvent technical safeguards may also run afoul of computer fraud and abuse laws. These laws, which vary by jurisdiction, can impose severe penalties for unauthorized access to computer systems. Therefore, both users and developers need to be aware that the occasional “Too Many Requests” message could be the first sign of legal trouble if larger, more systematic abuses occur.

Digital Access Constraints: A Closer Look at Server Limitations and Legal Rights

When a website returns a “Too Many Requests” error, it is often a signal that the server is overloaded or that safeguards are in place to protect its resources. These technical limitations are not just operational issues—they have significant legal dimensions as well.
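
On the wire, this message is HTTP status code 429, defined in RFC 6585, which also allows the server to include a `Retry-After` header telling the client when to try again. The following is a minimal sketch, not any particular server's implementation, of how a site might decide between serving a request and answering 429 using a fixed-window counter. The class name `FixedWindowLimiter`, the per-client keys, and the limits are hypothetical; timestamps are passed in explicitly so the logic is easy to follow and test.

```python
import math


class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each `window`-second window.

    Hypothetical sketch: a real server would also evict stale entries and
    share counters across processes or machines.
    """

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        # client_id -> (start of the client's current window, requests counted in it)
        self._counters: dict[str, tuple[float, int]] = {}

    def handle(self, client_id: str, now: float) -> tuple[int, dict[str, str]]:
        """Return an (HTTP status, response headers) pair for one request."""
        start, count = self._counters.get(client_id, (now, 0))
        if now - start >= self.window:
            # The client's previous window has expired, so start a fresh one.
            start, count = now, 0
        if count >= self.limit:
            # 429 Too Many Requests; Retry-After gives the whole seconds
            # remaining until this client's window resets.
            retry_after = math.ceil(start + self.window - now)
            return 429, {"Retry-After": str(retry_after)}
        self._counters[client_id] = (start, count + 1)
        return 200, {}
```

With `limit=3` and `window=60`, a fourth request from the same client within one minute receives a 429 whose `Retry-After` value counts down to the end of that window, while other clients are unaffected. Fixed windows are simple, but they let a client send up to twice the limit in a short span straddling a window boundary.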

For one, resource allocation online is critical to maintaining service reliability. Websites that offer vital services, such as online banking, healthcare platforms, or government portals, must ensure that their systems remain accessible under heavy loads. Implementing request throttling is therefore essential not only technically but also legally, given the high stakes of keeping such services continuously available.

From a legal perspective, server administrators must balance operational efficiency against the need to accommodate legitimate user activity. This balance is particularly challenging when traffic includes a mix of real users and automated systems. What appears to be an innocent surge in traffic might in fact be an orchestrated effort by a third party to disrupt the service, which could open the door to legal action under cybersecurity regulations.
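
Telling an ordinary traffic spike from a deliberate flood usually starts with measurement. One simple heuristic, sketched below under the assumption that each request can be attributed to a client identifier such as an API key or IP address, keeps a sliding-window log of recent timestamps per client and flags any client above a threshold. `SurgeMonitor` and its parameters are illustrative names, and a per-client threshold alone will miss an attack spread across many addresses.

```python
from collections import deque


class SurgeMonitor:
    """Sliding-window log that flags clients with unusually many recent requests.

    Hypothetical heuristic: a per-client threshold cannot by itself separate a
    popular page from a coordinated attack spread across many addresses.
    """

    def __init__(self, window: float, threshold: int) -> None:
        self.window = window
        self.threshold = threshold
        self._log: dict[str, deque[float]] = {}

    def record(self, client_id: str, now: float) -> bool:
        """Log one request; return True if the client now exceeds the threshold."""
        times = self._log.setdefault(client_id, deque())
        times.append(now)
        # Drop timestamps that have slid out of the window.
        while times and now - times[0] >= self.window:
            times.popleft()
        return len(times) > self.threshold

    def flagged(self, now: float) -> list[str]:
        """Return clients currently above the threshold, busiest first."""
        counts = {
            cid: sum(1 for t in times if now - t < self.window)
            for cid, times in self._log.items()
        }
        return sorted(
            (cid for cid, n in counts.items() if n > self.threshold),
            key=lambda cid: counts[cid],
            reverse=True,
        )
```

A flag from a monitor like this is a prompt for human review rather than proof of abuse; whether a surge is an orchestrated disruption is a factual question the logs can inform but not settle.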

In many cases, detailed service level agreements (SLAs) are drafted to mitigate legal risk. These agreements outline what constitutes acceptable usage, the circumstances under which service may be interrupted, and the recourse available to both parties. For instance, providers might include clauses that specifically allow temporary bans or rate limiting to protect the server. Although such clauses are often presented in a straightforward manner, enforcing them raises subtle legal questions that deserve scrutiny, especially when disputes arise.
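
A clause permitting temporary bans might describe an escalation in which repeated rate-limit violations lead to a cooling-off period. A minimal sketch of such an escalation follows; `TemporaryBanPolicy`, the three-strike threshold, and the 15-minute ban are assumptions for illustration, not terms drawn from any actual agreement.

```python
class TemporaryBanPolicy:
    """Escalate from rate limiting to a temporary ban after repeated violations.

    Hypothetical policy: `max_strikes` violations trigger a ban lasting
    `ban_seconds`. Strikes never expire here, which a real policy might change.
    """

    def __init__(self, max_strikes: int = 3, ban_seconds: float = 900.0) -> None:
        self.max_strikes = max_strikes
        self.ban_seconds = ban_seconds
        self._strikes: dict[str, int] = {}
        self._banned_until: dict[str, float] = {}

    def is_banned(self, client_id: str, now: float) -> bool:
        """True while the client's ban (if any) has not yet expired."""
        return now < self._banned_until.get(client_id, float("-inf"))

    def record_violation(self, client_id: str, now: float) -> bool:
        """Count one violation (e.g. a request answered with 429).

        Returns True if this violation triggers a new ban.
        """
        strikes = self._strikes.get(client_id, 0) + 1
        if strikes >= self.max_strikes:
            self._banned_until[client_id] = now + self.ban_seconds
            self._strikes[client_id] = 0  # the count starts over after a ban
            return True
        self._strikes[client_id] = strikes
        return False
```

Writing the escalation rule down this precisely is one way to make the agreement and the system's actual behavior match, which matters if a ban is later disputed.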

Balancing Public Interest and Proprietary Rights in Data Requests

A central concern in the debate over automated web requests is reconciling the public interest with the proprietary rights of website owners. This balance becomes especially important when the requests come from entities aiming to contribute to public knowledge, such as academic researchers or public policy advocates.

On one hand, there is a strong public-interest argument for broad access to data: it increases transparency and fosters new insights through data-driven research. On the other hand, website owners often invest significant time, money, and expertise in building and maintaining their platforms and proprietary databases. Consequently, they argue that these resources should not be exploited without fair compensation or permission.

This tension is often addressed through legal doctrines such as fair use and data scraping regulations. However, the application of these principles is frequently tangled with issues of jurisdiction and consent. In many instances, whether automated data collection constitutes a breach of the law can depend on the specific context, the volume of data requested, and how that data is ultimately used.

For example, educational institutions have in some cases defended scraping data for non-commercial research by arguing that it serves the public good. However, such cases are often contested, and legal opinions vary widely. The case law in this area remains unsettled, and future rulings will likely shape the contours of data rights online.

Below is a summary table that highlights some of the key considerations regarding automated requests and legal rights:

| Aspect | Considerations |
|---|---|
| Server Resources | Ensuring that systems remain accessible; balancing load distribution between automated and human-originated traffic. |
| Terms of Service | Explicit restrictions on automated data collection; contractual obligations and potential breach ramifications. |
| Legal Precedents | Interpretations of fair use; implications of computer fraud and abuse laws. |
| Public Interest | Data transparency debates; ethical research practices and the limits of non-commercial data usage. |
| Security Issues | Risks related to deliberate denial-of-service attacks and misuse of automated systems. |

Legal Precedents and Regulatory Framework in Managing Excessive Requests

The legal landscape concerning automated requests is still evolving, and several pivotal cases have begun to shape the discussion.

Court decisions have sometimes underscored the importance of contract law in adjudicating disputes over automated access. For instance, when a website clearly communicates its policies and a user—or an automated system—chooses to ignore these instructions, the court is more likely to side with the website operator. The rationale is that consent, once given through agreement to the terms of service, should be respected. However, not all jurisdictions view these agreements in the same light; what might be seen as acceptable in one legal system could be challenged in another under claims of unfair practices or overreach.

Another important legal perspective comes from statutes that address computer misuse. Laws targeting unauthorized access are intended to protect technical systems from disruptive activities. When an automated tool overwhelms a server and paralyzes its operations, it not only violates the website’s terms but may also trigger statutory penalties. This legal approach serves both as a deterrent and as a remedy for the resulting damage.

Still, it is crucial to recognize that the current legal framework is both imperfect and subject to rapid change. As more aspects of life are digitized, courts and legislators are pressed to update existing laws to account for digital nuances. This is not an easy task, given the fine distinctions between benign data collection for research purposes and more aggressive tactics intended to disrupt services or harvest data without permission.

An interesting development in this field is the rise of regulatory sandboxes: controlled environments in which new digital practices can be tested under regulatory oversight. Such sandboxes allow policymakers and industry experts to work through emerging digital practices without immediately resorting to punitive measures. They provide a space to experiment with new ideas, collect evidence, and eventually craft more balanced legislation that protects both innovation and fundamental rights.

Practical Recommendations for Managing Excessive Web Requests

Given the complexity of regulating automated requests, from both a technical and a legal perspective, website operators and developers who use web data should take proactive measures. Although many legal risks can appear intimidating at first glance, a careful and methodical approach can ease much of the concern associated with such activity.

For website operators, the following measures can help manage high volumes of requests:

- Clear Terms of Service: Establish straightforward policies regarding automated access. Ensure that the language in these agreements is understandable, and make users aware that exceeding certain thresholds may lead to temporary blocks.

- Rate Limiting: Employ technical methods such as rate limiting to prevent any single user or bot from overloading the system. This not only improves performance but can also strengthen the operator’s legal position by demonstrating proactive management.

- Regular Monitoring: Continuously monitor traffic to promptly detect and manage unusual surges. This can assist in distinguishing between legitimate traffic and potentially disruptive automated actions.

- Legal Audit: Have legal experts periodically review the terms of service and policies related to data access. Such audits can help ensure that the contractual provisions align with current legal standards and best practices.

- User Notifications: Inform users of the impact of exceeding usage limits. Transparent communication helps in preventing disputes and in managing expectations.
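
The rate-limiting recommendation can be made concrete with a token bucket, a widely used algorithm that permits short bursts while capping the sustained request rate. The sketch below covers a single client; a server would keep one bucket per client key (for example, in a dictionary) and could answer 429 whenever `allow` returns False. The class name and parameters are illustrative, and timestamps are passed in explicitly; in practice one would supply `time.monotonic()`.

```python
class TokenBucket:
    """Token-bucket rate limiter for a single client.

    Tokens refill continuously at `rate` per second up to `capacity`; each
    request spends one token. Bursts of up to `capacity` requests are allowed,
    after which requests are limited to the refill rate.
    """

    def __init__(self, capacity: float, rate: float, now: float = 0.0) -> None:
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity  # start full so an idle client can burst
        self.updated = now

    def allow(self, now: float) -> bool:
        """Refill for the elapsed time, then try to spend one token."""
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A bucket with `capacity=5` and `rate=1.0` accepts a burst of five requests at once, rejects the sixth, and then admits roughly one request per second as tokens refill. Publishing those two numbers in the terms of service is one way to tie the technical limit to the user notifications recommended above.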

For developers and researchers, it is equally important to be aware of the boundaries set by web platforms:

- Compliance with Terms: Always ensure that your automated processes abide by the platform’s terms of service. If necessary, reach out for permission or use official APIs that govern data access.

- Ethical Data Collection: Consider the wider public good when collecting data. Avoid practices that might harm the service’s stability or exploit the data beyond the intended purpose.

- Transparent Methodologies: In cases where data collection is for research, be clear about the methodology and respectful of privacy considerations. This builds trust with both the data provider and the broader community.

- Collaboration with Legal Teams: Consult with legal advisors to ensure that the processes are robust and that any potential legal pitfalls are addressed ahead of time.

- Adaptive Strategies: Technologies and legal standards evolve. Developers should build systems with flexibility in mind to pivot quickly if regulations change.
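
On the developer side, respecting a platform’s limits in practice means slowing down when the server answers 429. The sketch below honors the `Retry-After` header when present (RFC 9110 allows either a number of seconds or an HTTP date) and otherwise falls back to capped exponential backoff with random jitter. The function names, the five-attempt limit, and the backoff constants are assumptions; official APIs often document their own retry rules, which should take precedence.

```python
import random
import time
import urllib.error
import urllib.request
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def retry_delay(retry_after: Optional[str], attempt: int, base: float = 1.0,
                cap: float = 60.0, now: Optional[datetime] = None) -> float:
    """Seconds to wait before retrying a request that received a 429.

    Honors Retry-After when it is present and parseable, either as
    delay-seconds ("120") or as an HTTP-date; otherwise uses capped
    exponential backoff with random jitter.
    """
    if retry_after:
        value = retry_after.strip()
        if value.isdecimal():
            return float(value)
        try:
            when = parsedate_to_datetime(value)
        except (TypeError, ValueError):
            when = None  # unparseable header: fall through to backoff
        if when is not None:
            if when.tzinfo is None:
                when = when.replace(tzinfo=timezone.utc)  # HTTP dates are GMT
            if now is None:
                now = datetime.now(timezone.utc)
            return max(0.0, (when - now).total_seconds())
    return random.uniform(0.0, min(cap, base * 2 ** attempt))


def fetch_with_backoff(url: str, max_attempts: int = 5) -> bytes:
    """GET `url`, waiting and retrying when the server answers 429."""
    attempt = 0
    while True:
        try:
            with urllib.request.urlopen(url, timeout=30) as response:
                return response.read()
        except urllib.error.HTTPError as err:
            attempt += 1
            if err.code != 429 or attempt >= max_attempts:
                raise  # other errors, or too many 429s, go to the caller
            time.sleep(retry_delay(err.headers.get("Retry-After"), attempt))
```

The random jitter spreads retries from many clients over time instead of having them all return at the same moment, which is part of not harming the service’s stability.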

The table below summarizes these practical recommendations:

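| Stakeholders | Recommendations |
|---|---|
| Website Operators | Clear terms of service; rate limiting; regular monitoring; periodic legal audits; user notifications. |
| Developers and Researchers | Compliance with terms; ethical data collection; transparent methodologies; collaboration with legal teams; adaptive strategies. |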

Understanding the Tension Between Innovation and Legal Boundaries

One of the most difficult issues in the digital domain is striking the right balance between fostering innovation and imposing necessary legal controls. On the one hand, platforms that enable open data exchange spur new business models, enhance consumer experiences, and drive academic research. On the other, unchecked automated activity can lead to abuse of services, compromise security, and even infringe on intellectual property rights.

This tension becomes particularly visible when misused automated requests impair not only a website’s functionality but also the broader digital ecosystem. In many ways, the server’s “Too Many Requests” response is a call for moderation: a signal that although servers can process large volumes of traffic, their infrastructure has limits, both technical and legal.

Although the discussion is often complicated and contentious, it is essential to recognize that legal frameworks exist to provide guidelines for responsible behavior online. Traditional legal concepts such as contract law, property rights, and consumer protection must now be reinterpreted in an environment where algorithmic decision-making and automated data collection are the norm.

The reality is that many current laws were drafted before the digital revolution took hold. As a result, lawmakers and regulators must work through fine distinctions of meaning to adapt these statutes to the modern context. While this can appear intimidating at first, it also creates an opportunity for a collaborative approach in which multiple stakeholders, from tech companies to civil rights groups, help shape legislation that is both fair and adaptable.

One promising development in this area is increasing engagement between technology experts and legal scholars. Conferences, working groups, and public consultations are becoming more frequent. These forums allow for comprehensive discussion of how to manage digital resources in a way that minimizes harm while promoting innovation. Although the current legal environment remains tense, many believe that a more cooperative approach will lead to better outcomes in the long run.

Insights from Recent Case Studies and Expert Opinions

Recent cases have provided valuable insights into the legal issues surrounding automated requests. One notable example comes from disputes over data scraping in the media and entertainment industries. In some cases, news organizations that attempted to automate data gathering met robust legal challenges from content providers. The courts had to examine the agreements between the parties in detail, considering whether the automated processes violated contractual terms or actively undermined the content providers’ business models.

Legal experts have emphasized that the outcome of such cases often hinges on whether the automated activity was conducted transparently and ethically. If a third party was seen to be working through established channels—such as using official APIs or acquiring data under mutual agreements—the legal repercussions were typically milder. By contrast, cases where the automated actions blatantly bypassed the protective measures of a website tended to result in harsher judgments.

Another significant context is academic research. Some researchers have argued that automated data collection, when done responsibly, falls under the umbrella of fair use or scholarly inquiry. Nevertheless, even in these cases, courts have consistently stressed the importance of respecting website rules. This underscores that while the public interest in data access is important, it does not override the need for clear, consensual agreements between parties.

Expert opinion in this domain highlights the need for what can best be described as collaborative self-governance. Legal professionals, technologists, and industry regulators all recognize that a siloed approach to managing automated requests is unlikely to succeed. Only through integrated strategies can stakeholders navigate the fine distinctions and hidden complexities of digital law in a manner that protects both innovation and infrastructure.

Below is a bullet-point summary of key expert insights:

- Transparent practices in automated querying are essential to avoid legal pitfalls.

- Legal enforcement should focus on harm prevention rather than on penalizing minor infractions.

- Collaboration between tech companies and lawmakers is necessary to update outdated legal frameworks.

- Moderation and rate limiting remain essential technical measures with legal backing.

- Case-by-case analysis is often required because of the fine distinctions among legal scenarios.

Future Considerations in a Digital Legal Landscape

Looking ahead, the interplay between automated web requests and the legal systems that regulate them is likely to become even more significant. With the accelerating pace of technological advancement, we can expect more difficult challenges as current legal frameworks are tested by new digital practices.

One area that merits close attention is the evolution of artificial intelligence and its impact on automated processes. As AI-powered tools become more advanced, the volume and sophistication of automated requests are likely to increase. This raises several questions: Will existing legal models be sufficient to handle these developments? How can regulators create rules that do not stifle innovation while still preserving fundamental rights?

Policymakers must work with industry experts to chart a path forward that is both adaptable and rigorous. One promising approach is the regulatory sandbox model discussed earlier, which could be expanded to address AI and machine learning applications explicitly. In such a controlled environment, experimentation with automated processes can continue safely while generating data to inform future legal standards.

Another area of future consideration is international harmonization of laws related to automated data access. Given the borderless nature of the internet, inconsistencies between different legal systems can lead to conflicts and loopholes that are easily exploited. For example, a practice that might be deemed acceptable in one country could be prosecuted in another, creating uncertainty for global companies and researchers alike.

In response to these challenges, we might see the development of international treaties or frameworks that standardize definitions and set minimum requirements for online data collection practices. Such harmonization would not only make it easier to enforce rules consistently but would also help reduce the risk of abuse by ensuring that any exploitation of automated requests is met with a unified response from regulators worldwide.

Moreover, as technology evolves, so too must the roles of legal professionals specializing in digital law. There is a growing need for lawyers who can work across disciplinary boundaries, combining expertise in computer science, data management, and traditional legal practice. These professionals will be key in helping organizations navigate the more difficult aspects of digital regulation while still harnessing the benefits of automation and large-scale data analysis.

The table below outlines some of the key future trends and considerations in the legal regulation of automated web requests:

| Future Trend | Implications for Law |
|---|---|
| AI and Machine Learning | Increasingly complex automated behaviors that require adaptive legal rules and proactive ethical guidelines. |
| International Harmonization | Standardized definitions and treaties to ensure consistency in enforcement across borders. |
| Regulatory Sandboxes | Safe environments to test innovative practices while collecting data that informs future legislation. |
| Interdisciplinary Legal Expertise | The rise of hybrid legal professionals who can bridge the gap between technology and law. |
| Enhanced Transparency Measures | Improved user awareness and adherence to clearly defined terms of service, reducing legal disputes over automated access. |

Concluding Thoughts on the Intersection of Technology and Legal Frameworks

The seemingly mundane “Too Many Requests” error message serves as a microcosm of the larger legal debates unfolding in our digital era. It reminds us that behind every technical safeguard lies a web of legal responsibilities, ethical considerations, and evolving regulatory challenges. While the legal landscape is often tense and confusing, each new case, policy, or technical innovation offers an opportunity to examine how we can manage digital interactions in a fair and balanced manner.

As legal professionals and technology experts continue to work together, it is essential that we approach these challenges with a focus on clarity, transparency, and fairness. Everyone from website operators to independent researchers has a role to play. Clear terms, transparent practices, and a willingness to adapt to new challenges will be key to finding a path that respects both the rights of individuals and the operational needs of today’s digital platforms.

Ultimately, the future of digital law will depend on our collective ability to harmonize technological innovation with legal and regulatory measures. This balance is crucial not only for maintaining the integrity of digital services but also for preserving public trust in how data is accessed and used. As we confront these evolving issues, all stakeholders must recognize that each “Too Many Requests” alert is a reminder of the need for measured, careful management of digital resources, and a call to action for industry and law alike.

In the coming years, expect to see more collaborative efforts aimed at resolving the subtle inconsistencies in regulation. This collaboration will be essential for creating a dynamic legal framework that can support new technological advancements while remaining robust enough to protect critical digital infrastructure. With ongoing dialogue and a commitment to fairness, we can craft policies that manage automated requests gracefully while ensuring that the digital world remains open, secure, and just.

As we conclude, let this discussion serve as both a warning and a guiding light. The challenges posed by excessive automated access are real and immediate, yet they are not insurmountable. By examining the details of our digital practices, communicating clearly, and fostering cooperation between the legal and tech communities, we can keep the promise of a fair digital future within our grasp.

Consider this: every technical hurdle invites us to reconnect with foundational principles of justice and fairness. The “Too Many Requests” message, therefore, is not just a hiccup in the digital framework; it is a call for recalibration. Embraced properly, that recalibration can ultimately strengthen the relationship between technology and law, ensuring that as we innovate, we also protect the rights and resources that help our digital society thrive.

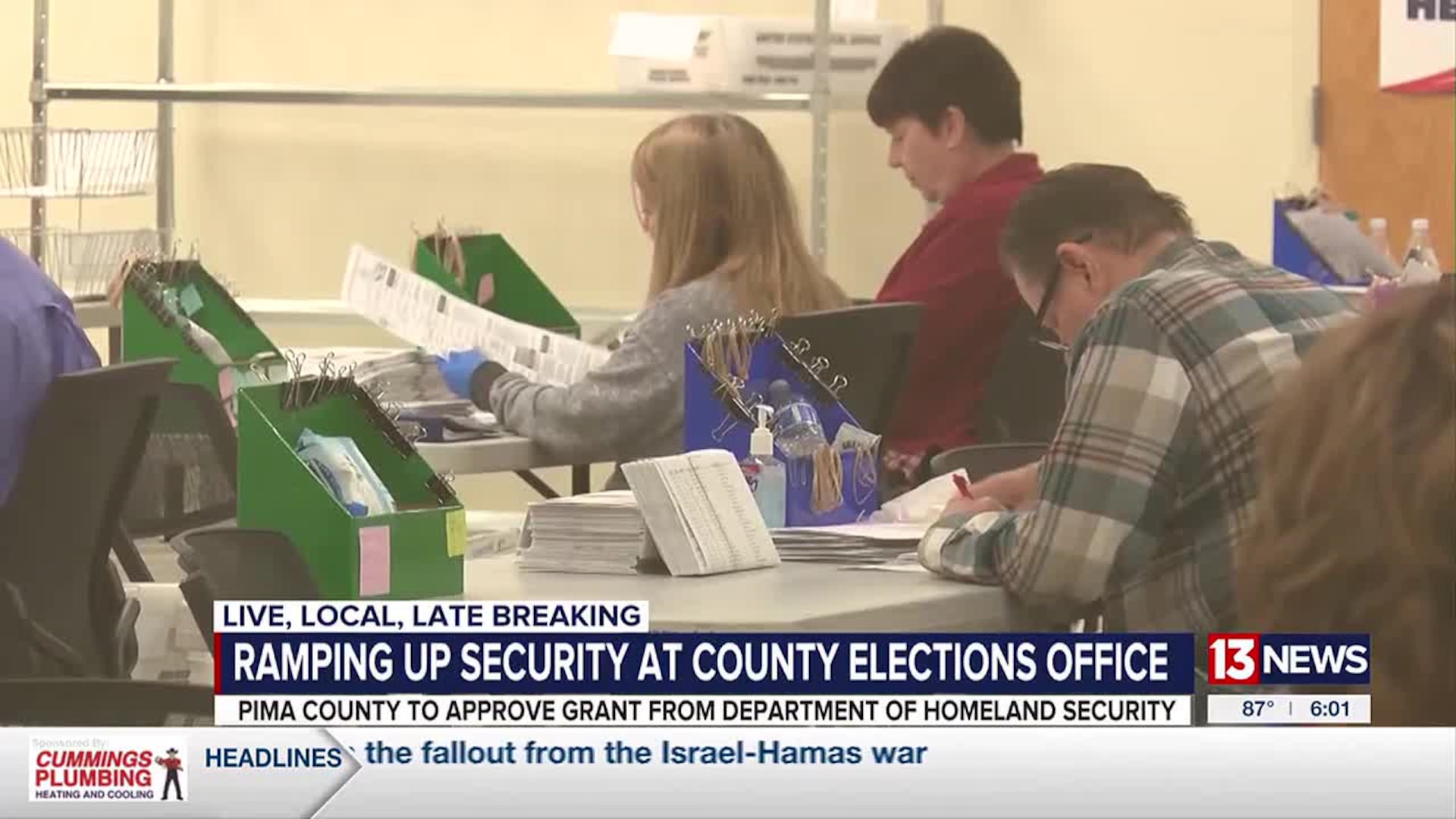

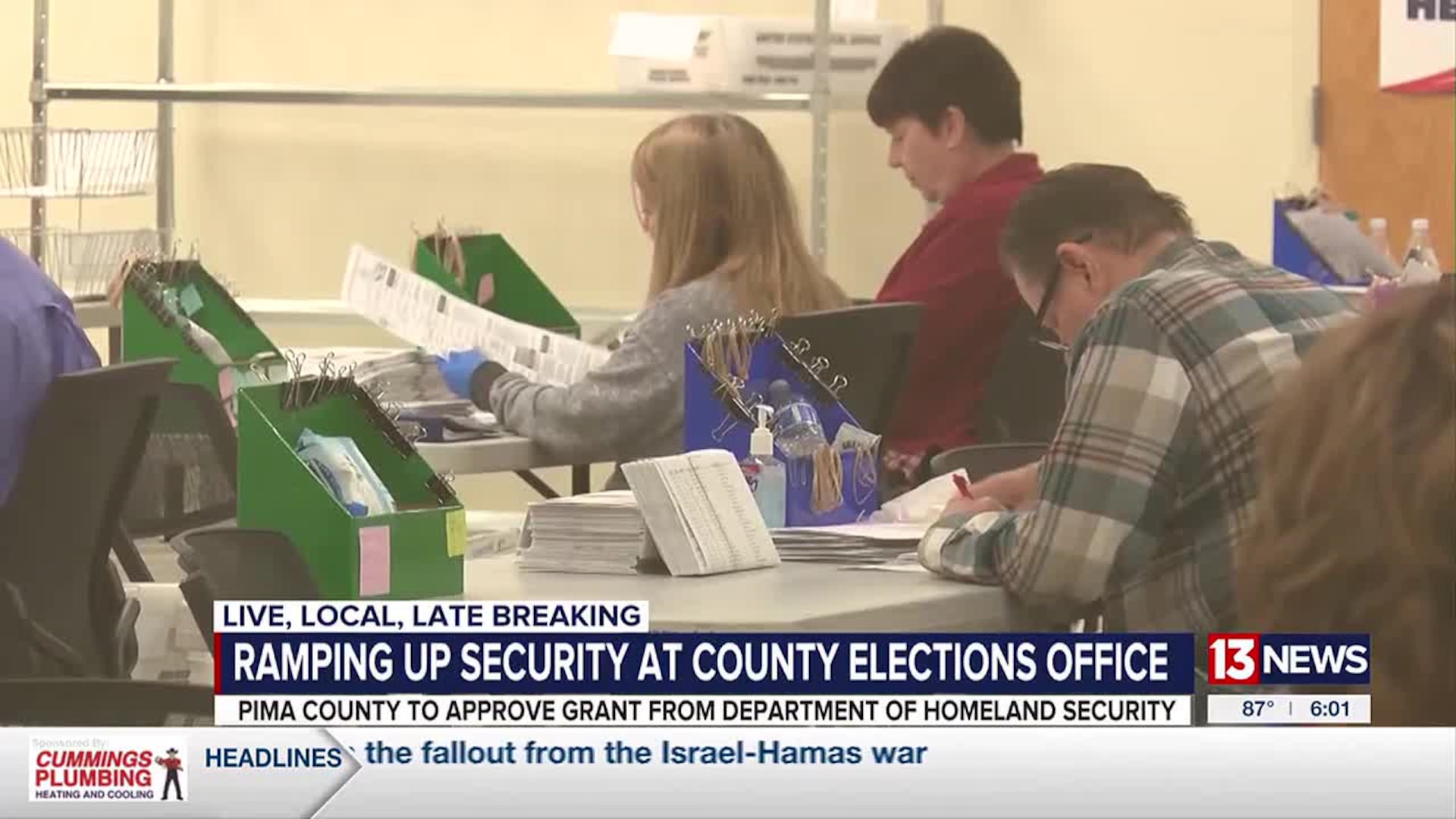

Originally posted at https://www.kvoa.com/news/decision2025/pima-county-fights-threats-to-election-staff-amid-false-claims/article_676bd153-2a2e-49c7-8c36-645e70f9b116.html
